SEO is not a “one size fits all” solution for all websites and B2B businesses. Each website and business is different, so you may come across multiple issues that require different solutions and a level of expertise to get the best results for your website. In particular, following Google’s December 2020 core algorithm update, it’s key to treat SEO as part of your marketing strategy so that your website keeps winning on the search results pages. Before we jump straight to fixing problems, you need to understand the issues that occur on your site and how to identify them. We have put together a list of the 6 most common SEO issues that we see in our day-to-day practice.

- Badly optimised title tags, meta descriptions, URLs and headings

- Duplicate content & pages

- Word count

- Missing alt text & large image size

- Broken links

- Missing indexability & crawlability features

1. Badly optimised title tags, meta descriptions, URLs and headings

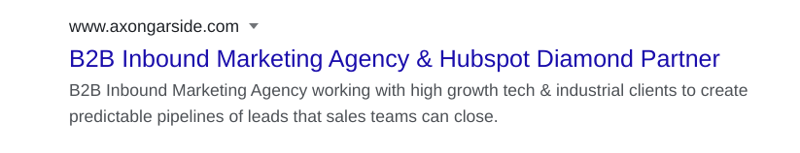

Title tags, meta descriptions, URLs and heading tags all play a huge role in the way that your website is seen by search engines. After detailed keyword research, it is crucial to include your target keywords in the title, description and URL of the page, as these are what appear on the search results pages. Headings 1 to 6 also influence the indexing process of your web pages.

Very often we come across websites with title tags, meta descriptions and URL structures that are either too short or too long, as well as pages with no H1 or with multiple H1s. It is important to get the main heading right because it carries the most weight with the search engine bots that crawl through your site. To make that process easy, make sure you include your main target keywords in the H1 tag as well as in the rest of the metadata. It is recommended that the title tag should be around 50-60 characters and the meta description should be 150-160 characters. Even though meta descriptions don’t get indexed by the search engines, they are an informative feature that lets your audience know what to expect when they visit your page. The URL of the page should include your main keyword and be relatively simple, so that your audience and the search engine know what content to expect on the page.
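The checks above are easy to automate. Below is a minimal sketch in Python, using only the standard library, that flags a title or meta description outside the recommended lengths and a page without exactly one H1. The names `MetadataAudit` and `audit_metadata` are our own for illustration, and the thresholds are simply the guideline figures quoted above, not hard limits.

```python
from html.parser import HTMLParser


class MetadataAudit(HTMLParser):
    """Collects the title text, meta description and H1 count of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_metadata(html):
    """Return a list of human-readable warnings for one HTML document."""
    parser = MetadataAudit()
    parser.feed(html)
    warnings = []

    # Guideline lengths from the article: 50-60 and 150-160 characters.
    title_len = len(parser.title.strip())
    if not 50 <= title_len <= 60:
        warnings.append(f"title is {title_len} characters (aim for 50-60)")

    desc_len = len(parser.meta_description.strip())
    if not 150 <= desc_len <= 160:
        warnings.append(f"meta description is {desc_len} characters (aim for 150-160)")

    if parser.h1_count != 1:
        warnings.append(f"page has {parser.h1_count} H1 tags (aim for exactly 1)")
    return warnings
```

Feed it the HTML of any page, for example `audit_metadata(open("page.html").read())`, and an empty list means the page passes all three checks.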

2. Duplicate content & pages

Duplicate content refers to content and pages that appear more than once, either internally on your own website or externally on someone else’s. Copying someone else’s content is bad practice in the digital marketing industry, and having duplicate content on your own site can also hurt your search engine rankings. According to Google, similar content in more than one location can make it difficult for the search engine to decide which version is more relevant to the user’s search intent and query.

We understand how difficult it is to think of something new to write when the topic has been covered thousands of times on the Internet. However, original content about your services, products, news and blogs is crucial in achieving higher rankings. Even the topic of this blog, “common SEO issues”, has 61,000,000 results on Google, yet we still write about it as it relates to our experiences in the B2B inbound marketing industry.
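If you want a rough way to spot internal duplicates, you can compare the text of your own pages against each other. The sketch below is one simple approach, not how Google does it: it splits each page into overlapping five-word sequences and measures how many of them two pages share (Jaccard similarity). The function names and the 0.8 threshold are assumptions chosen for illustration.

```python
import re
from itertools import combinations


def shingles(text, size=5):
    """Return the set of overlapping word sequences ("shingles") in a text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(text_a, text_b, size=5):
    """Jaccard similarity of two texts: 0.0 (nothing shared) to 1.0 (identical)."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_duplicates(pages, threshold=0.8):
    """Return (url_a, url_b, score) for every pair of pages at or above the threshold.

    `pages` maps a URL to the visible text of that page.
    The default threshold is an illustrative assumption; tune it for your site.
    """
    pairs = []
    for (url_a, text_a), (url_b, text_b) in combinations(pages.items(), 2):
        score = similarity(text_a, text_b)
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return pairs
```

Comparing every pair of pages gets slow on very large sites, so treat this as a starting point for a few hundred pages rather than a full crawl.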

3. Word count

Traditionally, it was recommended that you should always have a minimum of 300 to 350 words of content on all your website pages. If you look at the top 5 pages for the topic you are looking into, you might find that pages with around 2,000 words rank the highest. However, since the search engines are getting smarter and more advanced, word count is no longer about quantity but quality. The copy that you publish on your website pages, pillar pages or blogs should focus on the user and on answering their search intent. Inbound marketing offers strategies that focus on the user journey in order to steer users to the page that will answer their query and ultimately convert them into marketing qualified leads.

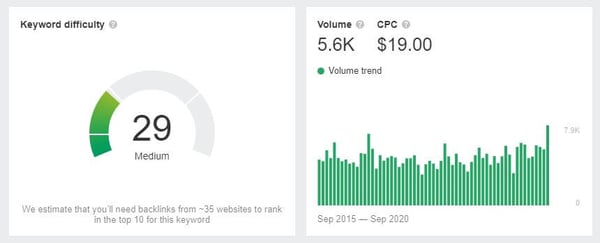

From an SEO perspective, the quality of the content also relies on keyword density and keyword targeting. If, for example, your company offers B2B tech services, it is key that the copy on your website targets the keywords you want people to find you for. If you offer “IT support”, this keyword has a medium difficulty of 26 out of 100 and a high monthly search volume of 5,600, according to Ahrefs’ data.
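To get a feel for these numbers on your own copy, here is a small Python sketch that counts the words on a page and works out how often a keyword phrase appears. It uses one common definition of keyword density (occurrences of the phrase divided by total words, as a percentage); other tools may calculate it differently, and `keyword_density` is a name we made up for this example.

```python
import re


def keyword_density(text, keyword):
    """Return (word_count, occurrences, density_percent) for a keyword phrase."""
    words = re.findall(r"[\w'-]+", text.lower())
    phrase = keyword.lower().split()
    word_count = len(words)
    if not phrase or not word_count:
        return word_count, 0, 0.0

    # Slide a window the length of the phrase across the page copy.
    n = len(phrase)
    occurrences = sum(
        1 for i in range(word_count - n + 1) if words[i:i + n] == phrase
    )
    density = 100 * occurrences / word_count
    return word_count, occurrences, round(density, 1)
```

For instance, `keyword_density(page_copy, "IT support")` tells you how many words the page has and how heavily it leans on that phrase. There is no magic target figure: the point is to check that the keyword is present without being stuffed in.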

4. Missing alt text & large image size

Alt text is a piece of HTML code that describes the appearance and function of a picture on your website. Adding alt text improves SEO because search crawlers read the code in order to “see” the image; unlike humans, they are not yet able to see an image the way we do. Alt text also helps with accessibility, as visually impaired users will be able to hear the alt text describing what the image shows. The best practice is to write a description of the image that also includes the keyword or keyword phrase you intend to target. How you add alt text depends on the CMS that your website is built on. HubSpot makes it very easy to add image alt text whenever a new image is uploaded to the content on your website.
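If you would like to check a page for missing alt text yourself, the following Python sketch lists every image whose `alt` attribute is absent or empty. It relies only on the standard library, and the names `AltTextAudit` and `images_missing_alt` are hypothetical. Note that an empty `alt=""` is valid for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Records the src of every <img> that has no usable alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        alt = attrs.get("alt")
        # Missing attribute, valueless attribute, or whitespace-only text.
        if alt is None or not alt.strip():
            self.missing_alt.append(attrs.get("src") or "(no src)")


def images_missing_alt(html):
    """Return the src of each image in the HTML that lacks alt text."""
    audit = AltTextAudit()
    audit.feed(html)
    return audit.missing_alt
```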

The optimisation and compression of images is also a key factor to keep in mind for SEO. Large files and images can slow down your website’s load time and lead to a bad user experience, resulting in a high bounce rate. When looking for tools or plugins to compress your images, it is important to choose ones that do not compromise image quality.

5. Broken links

Have you ever found yourself visiting a page and landing on a 404 error? We can guess that you were probably frustrated by that experience. A 404 error page is triggered when the page no longer exists on the website. Not only do broken links put users off staying on your website, but they can also have a negative impact on SEO. There are two types of links that should be monitored to make sure they still work: internal and external links. Internal links point to other pages on your own website, which you can easily control. External links point from your website to another one, and it can be a bit trickier to notice when they stop working. Ahrefs and Screaming Frog offer tools that report the internal and external broken links on your website.

Once you identify broken links on your site, the fix is to either remove them or replace them with links to better or more current content. This can be time-consuming, especially if you have a large website. However, it can benefit your site in many ways, such as reducing bounce rate, improving your rankings, and removing roadblocks in your user journey, resulting in better leads and revenue.
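For a quick spot check without a dedicated tool, a short Python script can request each link and report the ones that fail. This is a minimal sketch using only the standard library, and it is not how Ahrefs or Screaming Frog work internally; `fetch_status` and `find_broken_links` are names we chose for illustration. Some servers reject `HEAD` requests, so a link flagged here is worth confirming in a browser.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the HTTP status code for a URL, or None if the request failed."""
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "link-check-sketch"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # e.g. 404 for a page that no longer exists
    except (urllib.error.URLError, TimeoutError, ValueError):
        return None  # DNS failure, refused connection, timeout or malformed URL


def find_broken_links(urls, get_status=fetch_status):
    """Return {url: status} for every link that failed or answered with 4xx/5xx."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken
```

Pass it the list of links you collected from a page, for example `find_broken_links(["https://www.example.com/old-page"])`. The `get_status` parameter exists so you can swap in your own fetcher, which is also handy for testing without touching the network.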

6. Missing indexability & crawlability features

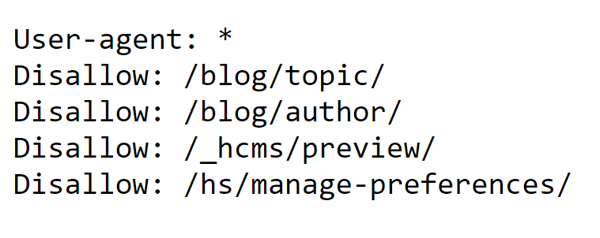

This is where SEO can get a bit more technical, especially for someone who has less experience in the field. Two things that make it easier for search bots to crawl your website are the robots.txt file and the XML sitemap. Quite often, we come across websites where these indexability features are out of date or missing completely.

The robots.txt file blocks or unblocks search bots’ access to the pages that you do or don’t want them to see. Some pages, like the author’s page or the customer login area, don’t need to be seen by the search engine bot, so it is worth setting them to “disallow” in the robots.txt file.
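To see how “disallow” rules behave, you can test them with Python’s built-in `urllib.robotparser` module before you publish the file. In the sketch below, the robots.txt content, the `/author/` and `/login/` paths and the `www.example.com` domain are all made-up examples; replace them with your own.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: hide the author and login areas from all bots.
ROBOTS_TXT = """\
User-agent: *
Disallow: /author/
Disallow: /login/

Sitemap: https://www.example.com/sitemap.xml
"""


def is_crawlable(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt rules let the bot fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/services/", "/login/", "/author/jane"):
        url = "https://www.example.com" + path
        print(url, "->", "allowed" if is_crawlable(ROBOTS_TXT, url) else "blocked")
```

Keep in mind that robots.txt is a request to well-behaved crawlers, not a security measure, so it should never be the only thing protecting a private area.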

An XML sitemap is a file that lists your website’s URLs. It is essentially a roadmap that tells the search engines what content is available on your site and how to reach it. However, it is important to point out that search engine bots index your pages because they found and crawled them, and it is the bots that decide whether your pages are worth indexing. Even so, submitting an XML sitemap through Google Search Console lets the search engine know that there are pages on your site that you think are worth its attention. To create an XML sitemap file you can use various online tools. Most commonly, your website’s CMS will have a tool to create the XML sitemap.
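If your platform doesn’t generate one for you, a basic sitemap is simple to build by hand. The Python sketch below produces a minimal file in the standard sitemap format with one `<loc>` entry per URL. The function name `build_sitemap` and the example URLs are assumptions for illustration, and optional fields such as `<lastmod>` are left out to keep it short.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    xml = build_sitemap([
        "https://www.example.com/",
        "https://www.example.com/services",
    ])
    # Save as UTF-8 so the file matches its XML declaration.
    with open("sitemap.xml", "w", encoding="utf-8") as handle:
        handle.write(xml)
```

Once the file is uploaded to your site, you can submit its address in Google Search Console.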

This is just the tip of the SEO iceberg. If you want to learn more about this subject, download our Ultimate Guide to SEO here.
